Block Specimen Producer Onboarding

Block Specimen Producer Nodes

Running a block specimen producer (BSP) node is very input/output (I/O) intensive. The requirements to run a BSP node match those of running a go-ethereum full node, with some additional provisions discussed below.

Hardware Requirements

The recommended configuration ensures that the sync does not lag and that the node can keep up with the Ethereum network to produce live block specimens.

Minimum

- CPU with 4+ cores

- 16GB RAM

- 1.5TB free storage space to sync the Mainnet

- 8 MBit/sec download Internet service

Recommended

- Fast CPU with 8+ cores

- 32 GB+ RAM

- Fast SSD with >= 1.5TB storage space

- 25+ MBit/sec download Internet service
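As a rough pre-flight check, the two tiers above can be encoded and compared against a host. The sketch below is hypothetical and not part of any Covalent tooling. It reads the CPU count with runtime.NumCPU and the free space with syscall.Statfs (Linux/macOS only). RAM and bandwidth are placeholder values you fill in by hand, because the Go standard library has no portable way to detect them. Point freeDiskTB at the filesystem that will hold the geth datadir.

```go
package main

import (
	"fmt"
	"runtime"
	"syscall"
)

// Tier mirrors one column of the hardware requirements above.
type Tier struct {
	Name         string
	Cores        int
	RAMGB        int
	DiskTB       float64
	DownloadMbps int
}

// HostSpec describes the machine being evaluated.
type HostSpec struct {
	Cores        int
	RAMGB        int
	FreeDiskTB   float64
	DownloadMbps int
}

var (
	Minimum     = Tier{"minimum", 4, 16, 1.5, 8}
	Recommended = Tier{"recommended", 8, 32, 1.5, 25}
)

// shortfalls lists every requirement of tier t that host h does not meet.
func shortfalls(h HostSpec, t Tier) []string {
	var out []string
	if h.Cores < t.Cores {
		out = append(out, fmt.Sprintf("CPU cores: have %d, want %d+", h.Cores, t.Cores))
	}
	if h.RAMGB < t.RAMGB {
		out = append(out, fmt.Sprintf("RAM: have %d GB, want %d GB+", h.RAMGB, t.RAMGB))
	}
	if h.FreeDiskTB < t.DiskTB {
		out = append(out, fmt.Sprintf("free disk: have %.2f TB, want %.1f TB+", h.FreeDiskTB, t.DiskTB))
	}
	if h.DownloadMbps < t.DownloadMbps {
		out = append(out, fmt.Sprintf("download: have %d Mbit/s, want %d Mbit/s+", h.DownloadMbps, t.DownloadMbps))
	}
	return out
}

// freeDiskTB reports the space available to unprivileged users on the
// filesystem holding path, in terabytes (1 TB = 1e12 bytes). Linux/macOS only.
func freeDiskTB(path string) (float64, error) {
	var st syscall.Statfs_t
	if err := syscall.Statfs(path, &st); err != nil {
		return 0, err
	}
	return float64(st.Bavail) * float64(st.Bsize) / 1e12, nil
}

func main() {
	disk, err := freeDiskTB("/")
	if err != nil {
		fmt.Println("statfs failed:", err)
		return
	}
	// RAMGB and DownloadMbps are hand-entered placeholders, not detected values.
	h := HostSpec{Cores: runtime.NumCPU(), RAMGB: 16, FreeDiskTB: disk, DownloadMbps: 25}
	for _, t := range []Tier{Minimum, Recommended} {
		fmt.Printf("%s tier shortfalls: %v\n", t.Name, shortfalls(h, t))
	}
}
```

The check does not cover the "fast SSD" part of the recommendation. Disk speed has to be judged separately.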

Software Requirements

Install the following software:

- 64-bit Linux or macOS

- SSL certificates

- Git, Docker, Docker-compose

- BSP-geth v1.4.0 (ships with Geth v1.11.2)

- Go v1.19

- Redis v7, Redis-cli 6.2.5

- TCP listener and UDP discovery port 30303 open

- ICMP IPv4 should not be blocked by an external firewall

Validator & Operator Prerequisites

Stake

Before reading any further, please make sure that you have:

Staked the Minimum CQT Staking requirements on Moonbeam for BSP.

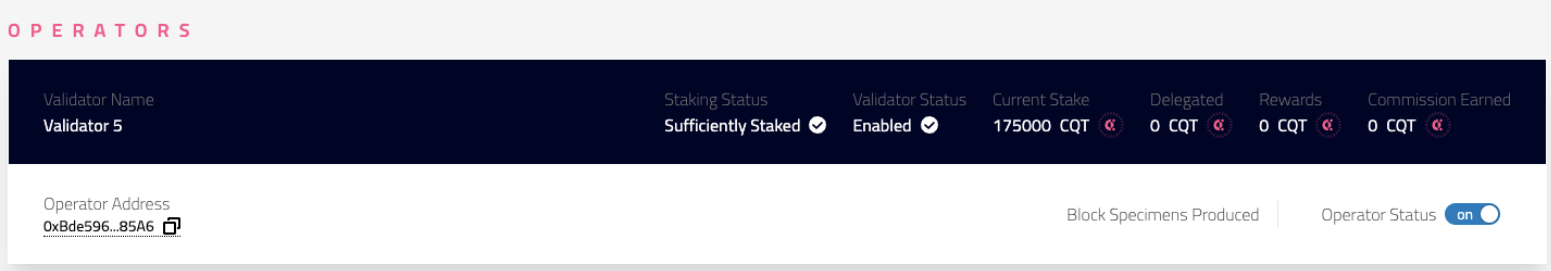

You have turned on your

Operator Statusin the operator dashboard as so:

GLMR

GLMR is needed to pay for gas on Moonbeam in order to submit proofs of block specimens. This costs approximately 5 GLMR per day. Hold the GLMR at the same address as the Operator Address.
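At the approximate rate of 5 GLMR per day quoted above, the funding runway for the Operator Address is simple division. The sketch below assumes that rate stays constant, which it will not exactly, because gas prices vary. The function names and the sample balance are illustrative.

```go
package main

import "fmt"

// approxGLMRPerDay is the approximate daily proof-submission cost quoted in this guide.
const approxGLMRPerDay = 5.0

// runwayDays estimates how many days of proof submissions a balance covers.
func runwayDays(balanceGLMR float64) float64 {
	return balanceGLMR / approxGLMRPerDay
}

// topUpFor returns how much GLMR to add so the balance covers the given number of days.
func topUpFor(balanceGLMR, days float64) float64 {
	need := days*approxGLMRPerDay - balanceGLMR
	if need < 0 {
		return 0
	}
	return need
}

func main() {
	balance := 120.0 // hypothetical balance at the Operator Address
	fmt.Printf("%.0f GLMR lasts about %.0f days; add %.0f GLMR to cover 30 days\n",
		balance, runwayDays(balance), topUpFor(balance, 30))
}
```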

MacOS 12.x (M1/Intel) Installation requirements

Install the Xcode command-line tools and accept the Xcode license:

xcode-select --install
sudo xcodebuild -license accept

These provide the minimum terminal/command-line tools needed to get started with software development on a Mac. You must also install Homebrew (for Mac M1/Intel) using the install script published at brew.sh.

Install Dependencies

Complete Installation Time: 1-1.5 hrs depending on your machine and network.

Install Git, Golang, Redis.

Git is used as the source code version control manager across all our repositories. Go is the programming language used to develop go-ethereum and the BSP patches; the agent described below is also written entirely in Go. Redis is our in-memory database, cache and streaming service provider.

MacOS 12.x (M1/Intel)

brew install git go redis

Debian/Ubuntu

wget https://golang.org/dl/go1.19.linux-amd64.tar.gz
tar -xvf go1.19.linux-amd64.tar.gz
sudo mv go /usr/local
echo "" >> ~/.bashrc
echo 'export GOPATH=$HOME/go' >> ~/.bashrc
echo 'export GOROOT=/usr/local/go' >> ~/.bashrc
echo 'export GOBIN=$GOPATH/bin' >> ~/.bashrc
echo 'export PATH=$PATH:/usr/local/go/bin:$GOBIN' >> ~/.bashrc
echo 'export GO111MODULE=on' >> ~/.bashrc
source ~/.bashrc
# install redis and git
sudo apt install git redis-server

Fedora

dnf install git golang redis

RHEL/CentOS

Install Git, Golang, Redis

yum install git go-toolset redis

OpenSUSE/SLES

Install Git, Golang, Redis

zypper addrepo https://download.opensuse.org/repositories/devel:languages:go/openSUSE_Leap_15.3/devel:languages:go.repo
zypper refresh
zypper install git go redis

BSP-Geth & Lighthouse Setup

Clone the covalenthq/bsp-geth repo

git clone https://github.com/covalenthq/bsp-geth.git
cd bsp-geth

Build geth from the root of the repo (install Go first if you don’t have it):

make geth

If you also need all the geth-related developer tools, run make all instead.

Start Redis (our streaming service). On macOS:

brew services start redis
==> Successfully started `redis` (label: homebrew.mxcl.redis)

On Linux:

sudo systemctl start redis    # on Debian/Ubuntu the unit is named redis-server

Start redis-cli in a separate terminal so you can see the encoded BSPs as they are fed into Redis streams:

redis-cli
127.0.0.1:6379>

We are now ready to accept stream messages into Redis locally.

Go back to ~/bsp-geth and start geth with the configuration below. Here we specify the replication targets (block specimen targets) with the Redis stream topic key replication, in snap sync mode. Before executing, replace $PATH_TO_GETH_MAINNET_CHAINDATA with the location of the mainnet snapshot that was downloaded earlier. Everything else remains the same as given below.

./build/bin/geth --mainnet \
  --syncmode snap \
  --datadir $PATH_TO_GETH_MAINNET_CHAINDATA \
  --replication.targets "redis://localhost:6379/?topic=replication" \
  --replica.result --replica.specimen \
  --log.folder "./logs/"

Each of the BSP flags and its function is described below:

- --mainnet: lets geth know which network to synchronize with; this can also be --ropsten, --goerli, etc.
- --syncmode: enables different syncing strategies for geth. A full sync executes every block from block 0, while snap lets us execute from live blocks.
- --datadir: specifies a local datadir path for geth (note that we use “bsp” as the directory name within the Ethereum directory), so we don’t overwrite or touch other previously synced geth libraries across other chains.
- --replication.targets: points to Redis and tells the BSP where and how to send the BSP messages. This flag does not function without one or both of the flags below; if both are selected, a full block-replica is exported.
- --replica.result: tells the BSP to export all fields related to the block-result specification (if only this flag is selected, the exported object is a block-result).
- --replica.specimen: tells the BSP to export all fields related to the block-specimen specification (if only this flag is selected, the exported object is a block-specimen).

bsp-geth also needs a consensus client. We’ve tested Lighthouse and found it to be stable and to work well. Follow the Lighthouse installation instructions to install it, then run Lighthouse using:

lighthouse bn \
  --network mainnet \
  --execution-endpoint http://localhost:8551 \
  --execution-jwt $PATH_TO_GETH_MAINNET_CHAINDATA/geth/jwtsecret \
  --checkpoint-sync-url https://mainnet.checkpoint.sigp.io \
  --disable-deposit-contract-sync
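The --replication.targets URL given to geth and the --redis-url passed to bsp-agent later in this guide must point at the same Redis server and stream topic. The hypothetical helper below uses Go's net/url to extract the host, database index and topic query parameter, then compares them. It treats a missing database index as Redis's default database 0. It is not how bsp-geth or bsp-agent parse these URLs internally.

```go
package main

import (
	"fmt"
	"net/url"
	"strings"
)

// redisTarget is a hypothetical view of a redis:// replication URL.
type redisTarget struct {
	Host  string // host:port of the Redis server
	DB    string // database index from the path; Redis defaults to "0"
	Topic string // stream key from the ?topic= query parameter
}

func parseRedisTarget(raw string) (redisTarget, error) {
	u, err := url.Parse(raw)
	if err != nil {
		return redisTarget{}, err
	}
	if u.Scheme != "redis" {
		return redisTarget{}, fmt.Errorf("expected redis:// scheme, got %q", u.Scheme)
	}
	t := redisTarget{
		Host:  u.Host, // userinfo such as "username:" is excluded from Host
		DB:    strings.TrimPrefix(u.Path, "/"),
		Topic: u.Query().Get("topic"),
	}
	if t.DB == "" {
		t.DB = "0"
	}
	if t.Topic == "" {
		return t, fmt.Errorf("missing ?topic= in %q", raw)
	}
	return t, nil
}

// sameStream reports whether the producer and consumer URLs name the same stream.
func sameStream(producer, consumer string) (bool, error) {
	p, err := parseRedisTarget(producer)
	if err != nil {
		return false, err
	}
	c, err := parseRedisTarget(consumer)
	if err != nil {
		return false, err
	}
	return p == c, nil
}

func main() {
	ok, err := sameStream(
		"redis://localhost:6379/?topic=replication",            // bsp-geth --replication.targets
		"redis://username:@localhost:6379/0?topic=replication", // bsp-agent --redis-url
	)
	fmt.Println("same stream:", ok, "error:", err)
}
```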

Wait for the Ethereum chain to sync to the tip. Connect to the node’s IPC endpoint to check how far the node has synced:

./build/bin/geth attach $PATH_TO_GETH_MAINNET_CHAINDATA/geth.ipc

Once connected, wait for the node to reach the highest known block to start creating live block specimens.

Welcome to the Geth JavaScript console!

instance: Geth/v1.10.17-stable-d1a92cb2/darwin-arm64/go1.17.2
at block: 10487792 (Mon Apr 11 2022 14:01:59 GMT-0700 (PDT))
 datadir: /Users/<user>/bsp/rinkeby-chain-data-snap
 modules: admin:1.0 clique:1.0 debug:1.0 eth:1.0 miner:1.0 net:1.0 personal:1.0 rpc:1.0 txpool:1.0 web3:1.0

To exit, press ctrl-d or type exit
> eth.syncing
{
  currentBlock: 10487906,
  healedBytecodeBytes: 0,
  healedBytecodes: 0,
  healedTrienodeBytes: 0,
  healedTrienodes: 0,
  healingBytecode: 0,
  healingTrienodes: 0,
  highestBlock: 10499433,
  startingBlock: 10486736,
  syncedAccountBytes: 0,
  syncedAccounts: 0,
  syncedBytecodeBytes: 0,
  syncedBytecodes: 0,
  syncedStorage: 0,
  syncedStorageBytes: 0
}

Now wait until you see log lines like these in the geth terminal:

INFO [04-11|16:35:48.554|core/chain_replication.go:317] Replication progress sessID=1 queued=1 sent=10960 last=0xffc46213ccd3c55b75f73a0bc29c25780eb37f04c9f2b88179e9d0fb889a4151
INFO [04-11|16:36:04.183|core/blockchain_insert.go:75] Imported new chain segment blocks=1 txs=63 mgas=13.147 elapsed=252.747ms mgasps=52.015 number=10,486,732 hash=8b57c8..bd5c79 dirty=255.49MiB
INFO [04-11|16:36:04.189|core/block_replica.go:41] Creating Block Specimen Exported block=10,486,732 hash=0x8b57c8606d74972c59c56f7fe672a30ed6546fc8169b6a2504abb633aebd5c79
INFO [04-11|16:36:04.189|core/rawdb/chain_iterator.go:338] Unindexed transactions blocks=1 txs=9 tail=8,136,733 elapsed="369.12µs"
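The eth.syncing call shown in the console is also available over JSON-RPC as eth_syncing. Its result is either false or an object whose block numbers are hex-encoded quantities; the console displays them as decimals. The minimal Go sketch below assumes you already have the raw result bytes from an RPC client. It decodes them and reports how many blocks remain to the tip. Only startingBlock, currentBlock and highestBlock are read; the other progress fields are ignored. The type and function names are illustrative.

```go
package main

import (
	"bytes"
	"encoding/json"
	"fmt"
	"strconv"
	"strings"
)

// syncProgress holds the block-number fields of an eth_syncing result.
type syncProgress struct {
	StartingBlock, CurrentBlock, HighestBlock uint64
}

// Remaining is the number of blocks still to import before reaching the tip.
func (p syncProgress) Remaining() uint64 {
	if p.CurrentBlock >= p.HighestBlock {
		return 0
	}
	return p.HighestBlock - p.CurrentBlock
}

// hexQuantity decodes a JSON-RPC quantity such as "0x10".
func hexQuantity(s string) (uint64, error) {
	if !strings.HasPrefix(s, "0x") {
		return 0, fmt.Errorf("not a hex quantity: %q", s)
	}
	return strconv.ParseUint(s[2:], 16, 64)
}

// parseSyncing interprets the "result" member of an eth_syncing response.
// It returns (nil, nil) when the node reports false, i.e. it is not syncing.
func parseSyncing(result []byte) (*syncProgress, error) {
	if bytes.Equal(bytes.TrimSpace(result), []byte("false")) {
		return nil, nil
	}
	var raw struct {
		StartingBlock string `json:"startingBlock"`
		CurrentBlock  string `json:"currentBlock"`
		HighestBlock  string `json:"highestBlock"`
	}
	if err := json.Unmarshal(result, &raw); err != nil {
		return nil, err
	}
	var p syncProgress
	var err error
	if p.StartingBlock, err = hexQuantity(raw.StartingBlock); err != nil {
		return nil, err
	}
	if p.CurrentBlock, err = hexQuantity(raw.CurrentBlock); err != nil {
		return nil, err
	}
	if p.HighestBlock, err = hexQuantity(raw.HighestBlock); err != nil {
		return nil, err
	}
	return &p, nil
}

func main() {
	p, err := parseSyncing([]byte(`{"startingBlock":"0x0","currentBlock":"0x10","highestBlock":"0x20"}`))
	if err != nil {
		fmt.Println("parse error:", err)
		return
	}
	if p == nil {
		fmt.Println("not syncing: block specimens should start flowing")
		return
	}
	fmt.Printf("%d blocks behind the tip\n", p.Remaining())
}
```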

Syncing can take anywhere from a few hours to a few days. The time depends on whether the source chaindata is already available at the datadir location, or whether a live sync from scratch is downloading a new copy of the blockchain data from peers. In the latter case, network bandwidth and other factors that affect devp2p protocol performance can cause further delays. Once state sync is complete and eth.syncing returns false, you can expect to see block specimens in the Redis stream. The log lines shown above are captured from the bsp-geth service as the node begins to export live block specimens.

The logs above show that new block replicas containing the block specimens are being produced and streamed to the Redis topic replication. After this, you can check that Redis is stacking up the BSP messages by running the redis-cli command below, which returns the number of messages in the stream:

$ redis-cli
127.0.0.1:6379> xlen replication
11696

If it doesn’t, the BSP producer isn’t producing messages! In this case, look at the logs above and check for any WARN / DEBUG logs that could be responsible for the malfunction.

For quick development iteration and faster network sync, generate a new node key so the node re-syncs quickly with the Ethereum network during development and testing. To do this, go to the root of go-ethereum (your bsp-geth checkout) and run the bootnode helper. NOTE: To use the bootnode binary, run make all in place of make geth; this builds all the additional helper binaries that bsp-geth ships with.

./build/bin/bootnode -genkey ~/.ethereum/bsp/geth/nodekey
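The xlen check described above can also be scripted. Instead of pulling in a Redis client library, the sketch below speaks Redis's RESP protocol directly over TCP. It encodes XLEN replication as an array of bulk strings and parses the integer reply. It assumes a local Redis without a password; if you set REDIS_PWD you would need to send AUTH first. The function names are illustrative.

```go
package main

import (
	"bufio"
	"fmt"
	"net"
	"strconv"
	"strings"
	"time"
)

// encodeCommand serialises a Redis command as a RESP array of bulk strings.
func encodeCommand(args ...string) string {
	var b strings.Builder
	fmt.Fprintf(&b, "*%d\r\n", len(args))
	for _, a := range args {
		fmt.Fprintf(&b, "$%d\r\n%s\r\n", len(a), a)
	}
	return b.String()
}

// parseInteger parses a RESP integer reply such as ":11696\r\n".
func parseInteger(line string) (int64, error) {
	line = strings.TrimRight(line, "\r\n")
	switch {
	case strings.HasPrefix(line, ":"):
		return strconv.ParseInt(line[1:], 10, 64)
	case strings.HasPrefix(line, "-"):
		return 0, fmt.Errorf("redis error: %s", line[1:])
	default:
		return 0, fmt.Errorf("unexpected reply: %q", line)
	}
}

// streamLength sends XLEN key to the Redis server at addr and returns the length.
func streamLength(addr, key string) (int64, error) {
	conn, err := net.DialTimeout("tcp", addr, 3*time.Second)
	if err != nil {
		return 0, err
	}
	defer conn.Close()
	conn.SetDeadline(time.Now().Add(3 * time.Second))
	if _, err := conn.Write([]byte(encodeCommand("XLEN", key))); err != nil {
		return 0, err
	}
	line, err := bufio.NewReader(conn).ReadString('\n')
	if err != nil {
		return 0, err
	}
	return parseInteger(line)
}

func main() {
	n, err := streamLength("127.0.0.1:6379", "replication")
	if err != nil {
		fmt.Println("could not query redis:", err)
		return
	}
	fmt.Println("messages in replication stream:", n)
}
```

A length that stays at zero, or keeps growing without the agent draining it later, points back at the troubleshooting advice above.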

IPFS-Pinner Setup

ipfs-pinner is the interface to the storage layer of the network. It is a custom IPFS node with pinning-service support for web3.storage and Pinata, content archive manipulation, and other storage features. It is meant for uploading and fetching network artifacts of the Covalent Decentralized Network, such as block specimens, block results, or any other processed block data.

Clone and build ipfs-pinner (in a separate folder). We store the block specimens in the IPFS network, and bsp-agent interacts with the ipfs-pinner server to handle the storage/retrieval needs of the network.

$ cd ..
$ git clone --depth 1 --branch v0.1.13 https://github.com/covalenthq/ipfs-pinner.git  ## update if a newer release is available
$ cd ipfs-pinner
$ make clean server-dbg

Use direnv to export WEB3_JWT in the ipfs-pinner repo, then run ipfs-pinner:

$ cat .envrc
export WEB3_JWT="<<ASK_ON_DISCORD>>"
$ make run
Computing default go-libp2p Resource Manager limits based on:
    - 'Swarm.ResourceMgr.MaxMemory': "8.6 GB"
    - 'Swarm.ResourceMgr.MaxFileDescriptors': 30720

Applying any user-supplied overrides on top.
Run 'ipfs swarm limit all' to see the resulting limits.

2023/06/06 12:33:05 Listening...

With this, ipfs-pinner is set up and can upload/fetch network artifacts.

BSP-Agent Setup

Install Dependencies

Install direnv.

MacOS 12.x (M1/Intel)

brew install direnv

Debian/Ubuntu

apt install direnv

Fedora

dnf install direnv

RHEL/CentOS

yum install direnv

SLES/OpenSUSE

zypper install direnv

direnv is used for secret management and control, since sensitive parameters to the agent cannot be passed as command-line flags. direnv allows safe and easy management of secrets such as Ethereum private keys for the operator accounts on the Covalent Network and Redis instance access passwords. Because these applications are exposed to the internet on HTTP ports, it’s essential that this information is never logged anywhere. After installing direnv, enable it by adding its hook to ~/.bashrc or ~/.zshrc, depending on which shell you use by default.

For bash users - add the following line to your ~/.bashrc

eval "$(direnv hook bash)"

For zsh users - add the following line to your ~/.zshrc

eval "$(direnv hook zsh)"

After adding this line, don’t forget to reload your bash / zsh config by running the command that matches your shell in your terminal:

source ~/.zshrc    # zsh
source ~/.bashrc   # bash

Build & Run BSP-Agent From Source

Next we’re going to install the agent that transforms the specimens into AVRO-encoded blocks, proves that their data contains what is encoded, and uploads them to an object store. Clone the covalenthq/bsp-agent repo in a separate terminal and build it:

git clone --depth 1 --branch v1.4.8 https://github.com/covalenthq/bsp-agent.git
cd bsp-agent
make build

Add an .envrc file to ~/bsp-agent and add the private key of your operator account address to it. (See below for how to do this for this workshop.)

cd ~/bsp-agent
touch .envrc

Here we set up the required environment variables for the bsp-agent. Other variables that are not secrets are passed as flags. Add the lines below to the .envrc file, replacing the RPC URL and private key placeholders, and save the file.

export MB_RPC_URL=***
export MB_PRIVATE_KEY=***

Allow direnv to load the exported variables by running the direnv allow command:

direnv allow .

NOTE: You should see something like this if the environment variables have been correctly exported and are ready to use. If you don’t see this output in the terminal, please install/enable direnv using the instructions in the BSP-Agent Setup section above.

direnv: loading ~/Documents/covalent/bsp-agent/.envrc
direnv: export +MB_PRIVATE_KEY +MB_RPC_URL

Make sure that you replace $PROOF_CHAIN_CONTRACT_ADDR with the copied “proof-chain” contract address for the --proof-chain-address flag in the command below. Also create a bin directory at ~/bsp-agent to store the block-specimen binary files:

mkdir -p bin/block-ethereum

NOTE: Moonbeam Proof-Chain Address:

0x4f2E285227D43D9eB52799D0A28299540452446E

Assuming ipfs-pinner is running (previous section) at the default http://127.0.0.1:3001/, we can now start the bsp-agent:

$ cd ../bsp-agent
$ ./bin/bspagent \
  --redis-url="redis://username:@localhost:6379/0?topic=replication" \
  --avro-codec-path="./codec/block-ethereum.avsc" \
  --binary-file-path="./bin/block-ethereum" \
  --block-divisor=35 \
  --proof-chain-address=0x4f2E285227D43D9eB52799D0A28299540452446E \
  --consumer-timeout=10000000 \
  --log-folder ./logs/ \
  --ipfs-pinner-server "http://127.0.0.1:3001"

Each of the agent’s flags and its function is described below (some may have been omitted to simplify this workshop):

- --redis-url: tells the agent where to find the BSP messages, at which stream topic key (replication), and what the consumer group is named, using the field after # (in this case, replicate). You can also provide a password to the Redis instance here, but we recommend adding the line below to the .envrc instead:

export REDIS_PWD=your-redis-pwd

- --avro-codec-path: tells the BSP agent the relative path to the AVRO .avsc files in the repo. Since the agent ships with the corresponding .avsc files, this remains fixed for the time being.
- --binary-file-path: tells the agent whether local copies of the block-replica objects being created should be stored in a given local directory. Please make sure the path (and directory) exists before passing this flag.
- --block-divisor: lets the operator configure the number of block specimens being created; only block numbers divisible by this number will be extracted, packed, encoded, uploaded and proofed.
- --proof-chain-address: specifies the address of the proof-chain contract deployed to the Moonbeam network.
- --consumer-timeout: specifies when the agent stops accepting new messages from the pending queue for encoding, proving and uploading.
- --log-folder: specifies the location (folder) where the log files are placed. In case of errors (like permission errors), the logs are not recorded in files.
- --ipfs-pinner-server: specifies the HTTP URL where the ipfs-pinner server is listening. By default, it is http://127.0.0.1:3001.

NOTE: if the bsp-agent command above fails with a message about permission issues accessing ~/.ipfs/*, run sudo chmod -R 700 ~/.ipfs and try again.

If all the CLI flags are set correctly (either in the Makefile or on the command line), you should see something like this in the logs:

time="2022-04-18T17:26:47Z" level=info msg="Initializing Consumer: fb78bb1c-1e14-4905-bb1f-0ea96de8d8b5 | Redis Stream: replication-1 | Consumer Group: replicate-1" function=main line=167
time="2022-04-18T17:26:47Z" level=info msg="block-specimen not created for: 10430548, base block number divisor is :3" function=processStream line=332
time="2022-04-18T17:26:47Z" level=info msg="stream ids acked and trimmed: [1648848491276-0], for stream key: replication-1, with current length: 11700" function=processStream line=339
time="2022-04-18T17:26:47Z" level=info msg="block-specimen not created for: 10430549, base block number divisor is :3" function=processStream line=332
time="2022-04-18T17:26:47Z" level=info msg="stream ids acked and trimmed: [1648848505274-0], for stream key: replication-1, with current length: 11699" function=processStream line=339
---> Processing 4-10430550-replica <---
time="2022-04-18T17:26:47Z" level=info msg="Submitting block-replica segment proof for: 4-10430550-replica" function=EncodeProveAndUploadReplicaSegment line=59
time="2022-04-18T17:26:47Z" level=info msg="binary file should be available: ipfs://QmUQ4XYJv9syrokUfUbhvA4bV8ce7w1Q2dF6NoNDfSDqxc" function=EncodeProveAndUploadReplicaSegment line=80
time="2022-04-18T17:27:04Z" level=info msg="Proof-chain tx hash: 0xcc8c487a5db0fec423de62f7ac4ca81c630544aa67c432131cabfa35d9703f37 for block-replica segment: 4-10430550-replica" function=EncodeProveAndUploadReplicaSegment line=86
time="2022-04-18T17:27:04Z" level=info msg="File written successfully to: /scratch/node/block-ethereum/4-10430550-replica-0xcc8c487a5db0fec423de62f7ac4ca81c630544aa67c432131cabfa35d9703f37" function=writeToBinFile line=188
time="2022-04-18T17:27:04Z" level=info msg="car file location: /tmp/28077399.car\n" function=generateCarFile line=133
time="2022-04-18T17:27:08Z" level=info msg="File /tmp/28077399.car successfully uploaded to IPFS with pin: QmUQ4XYJv9syrokUfUbhvA4bV8ce7w1Q2dF6NoNDfSDqxc" function=HandleObjectUploadToIPFS line=102
time="2022-04-18T17:27:08Z" level=info msg="stream ids acked and trimmed: [1648848521276-0], for stream key: replication-1, with current length: 11698" function=processStream line=323

If you see the above log, you’re successfully running the entire block specimen producer workflow. The BSP-agent is reading messages from the Redis stream topic, then encoding, compressing, proving and uploading them to IPFS (through ipfs-pinner) in segments of multiple blocks at a time.

If, however, the agent fails and isn’t able to complete the workflow, fear not! It fails atomically, and the messages persist in the stream they were being read from. When you restart correctly, the same messages will be reprocessed until they succeed.

Please note any ERR / WARN / DEBUG messages that could be responsible for the failure. The messages should be clear enough to pinpoint the exact issue. Additionally, get support from Covalent's discord community!
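The --block-divisor rule described above is visible in the agent log. With a divisor of 3, blocks 10430548 and 10430549 are skipped and 10430550 is processed. The sketch below implements that selection rule. It is written from the flag description rather than from the agent's source, and the function names are illustrative.

```go
package main

import "fmt"

// shouldCreateSpecimen mirrors the --block-divisor rule: only block numbers
// divisible by the divisor are extracted, encoded, uploaded and proofed.
// A zero divisor is treated as "never" to avoid a division by zero.
func shouldCreateSpecimen(block, divisor uint64) bool {
	return divisor != 0 && block%divisor == 0
}

// nextSpecimenBlock returns the first block after `after` that will be
// processed. It returns 0 for a zero divisor.
func nextSpecimenBlock(after, divisor uint64) uint64 {
	if divisor == 0 {
		return 0
	}
	return (after/divisor + 1) * divisor
}

func main() {
	for _, b := range []uint64{10430548, 10430549, 10430550} {
		fmt.Printf("block %d, divisor 3: specimen=%v\n", b, shouldCreateSpecimen(b, 3))
	}
	fmt.Println("next processed block after 10430548 with divisor 35:", nextSpecimenBlock(10430548, 35))
}
```

As a rough estimate, Ethereum produces about 7,200 blocks per day (one per 12-second slot, ignoring missed slots). At that rate, --block-divisor=35 would select roughly 205 blocks per day.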

Sample Systemd Service Units

If the block specimen stack is running successfully and producing block specimens, congrats! To manage the services, you might want to use systemd. Below are sample systemd service files, so that a crash in any one component restarts that component rather than halting the system. Don't forget to replace the placeholders with actual values in these sample files.

BSP-Geth - Service Unit File

[Unit]
Description=Bsp Geth service
Wants=network-online.target
After=network-online.target

[Service]
User=ubuntu
Group=ubuntu
Type=simple
WorkingDirectory=/home/ubuntu/covalent/bsp-geth/
ExecStart=/home/ubuntu/covalent/bsp-geth/build/bin/geth --mainnet --log.debug --syncmode snap --datadir $PATH_TO_GETH_MAINNET_CHAINDATA --replication.targets "redis://localhost:6379/?topic=replication" --replica.result --replica.specimen --log.folder "./logs/"
Restart=always

[Install]
WantedBy=multi-user.target

Lighthouse - Service Unit File

[Unit]
Description=lighthouse service
Wants=network-online.target
After=network-online.target

[Service]
User=ubuntu
Group=ubuntu
Type=simple
WorkingDirectory=/home/ubuntu/covalent/lighthouse/
ExecStart=/home/ubuntu/covalent/lighthouse/build/bin/lighthouse bn --network mainnet --execution-endpoint http://localhost:8551 --execution-jwt $PATH_TO_GETH_MAINNET_CHAINDATA/geth/jwtsecret --checkpoint-sync-url https://mainnet.checkpoint.sigp.io --disable-deposit-contract-sync
Restart=always

[Install]
WantedBy=multi-user.target

IPFS-Pinner - Service Unit File

[Unit]
Description=ipfs-pinner client
Wants=network-online.target
After=syslog.target network-online.target

[Service]
User=ubuntu
Group=ubuntu
Environment="WEB3_JWT=<<ASK_ON_DISCORD>>"
Type=simple
ExecStart=/opt/ipfs-pinner/bin/server
Restart=always
TimeoutStopSec=infinity

[Install]
WantedBy=multi-user.target

BSP-Agent - Service Unit File

[Unit]
Description=Bsp Agent service
Wants=network-online.target
After=network-online.target

[Service]
User=ubuntu
Group=ubuntu
Type=simple
WorkingDirectory=/home/ubuntu/covalent/bsp-agent/
ExecStart=/home/ubuntu/covalent/bsp-agent/bin/bspagent --redis-url="redis://username:@localhost:6379/0?topic=replication" --avro-codec-path="/home/ubuntu/covalent/bsp-agent/codec/block-ethereum.avsc" --binary-file-path="/home/ubuntu/covalent/bsp-agent/data/bin/block-ethereum" --block-divisor=35 --proof-chain-address=0x4f2E285227D43D9eB52799D0A28299540452446E --consumer-timeout=10000000 --log-folder /home/ubuntu/covalent/bsp-agent/logs/ --ipfs-pinner-server=http://127.0.0.1:3001/
Restart=always

[Install]
WantedBy=multi-user.target
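The sample units contain placeholders that must be replaced. A small scanner can flag any that remain before you run systemctl daemon-reload. The regular expression is an assumption matched to the placeholder styles used in this guide: $PATH_TO_GETH_MAINNET_CHAINDATA, <<ASK_ON_DISCORD>> and <user>. It will also flag any legitimate $VARIABLE reference you add on purpose. The program and file names are hypothetical.

```go
package main

import (
	"fmt"
	"os"
	"regexp"
)

// placeholderRE matches $UPPER_CASE names, <<UPPER_CASE>> tokens and <lowercase> tokens.
var placeholderRE = regexp.MustCompile(`\$[A-Z_][A-Z0-9_]*|<<[A-Z_]+>>|<[a-z]+>`)

// findPlaceholders returns every placeholder-looking token in the unit text, in order.
func findPlaceholders(unit string) []string {
	return placeholderRE.FindAllString(unit, -1)
}

func main() {
	if len(os.Args) < 2 {
		fmt.Println("usage: checkunit <unit-file>")
		return
	}
	data, err := os.ReadFile(os.Args[1])
	if err != nil {
		fmt.Println("read failed:", err)
		return
	}
	if found := findPlaceholders(string(data)); len(found) > 0 {
		fmt.Println("unreplaced placeholders:", found)
		return
	}
	fmt.Println("no placeholders found")
}
```

For example, running go run checkunit.go /etc/systemd/system/bsp-geth.service checks the installed copy of the BSP-Geth unit.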

Support

If you need any assistance with the onboarding process or have technical and operational concerns please contact Rodrigo or Niall in the Covalent Network Discord.